Several factors affect the quality of a digital image. For one, the lens of the camera, especially in single-lens cameras, distorts the captured image. In our previous activity, we applied image processing techniques to correct such distortions. Aside from distortion, noise, which is a random variation of the brightness and color information of an image[2], also degrades the captured image and usually arises during acquisition. For instance, temperature alters the response of a camera sensor and thus affects the amount of noise in an image[1]. To restore such images, we use our knowledge of the degradation phenomenon, model it, and apply the inverse process to recover the image.

Noise is composed of random variables characterized by a probability density function (PDF)[1]. In this activity, we explore the most common noise models found in image processing applications, as well as some basic noise-reduction filters used in image restoration.

Noise Models

Gaussian noise:

$$p(z) = \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-(z-\mu)^2 / 2\sigma^2}$$

Rayleigh noise:

$$p(z) = \begin{cases} \dfrac{2}{b}(z-a)\, e^{-(z-a)^2/b} & z > a \\ 0 & \text{otherwise} \end{cases}$$

Erlang (gamma) noise:

$$p(z) = \begin{cases} \dfrac{a^b z^{b-1}}{(b-1)!}\, e^{-az} & z \ge 0 \\ 0 & z < 0 \end{cases}$$

Exponential noise:

$$p(z) = \begin{cases} a\, e^{-az} & z \ge 0 \\ 0 & z < 0 \end{cases}$$

Uniform noise:

$$p(z) = \begin{cases} \dfrac{1}{b-a} & a < z < b \\ 0 & \text{otherwise} \end{cases}$$

Impulse (salt-and-pepper) noise:

$$p(z) = \begin{cases} P_a & z = a \\ P_b & z = b \\ 0 & \text{otherwise} \end{cases}$$


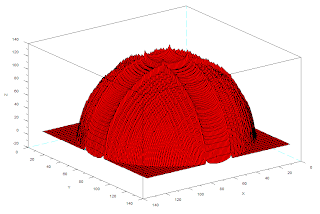

The figure below demonstrates the effect of each noise PDF on an image. The noise first shows up as a grainy texture.

Figure 1. (left) Original image and (right) the image with added (clockwise from upper left) Gaussian, gamma, exponential, Rayleigh, uniform, and salt-and-pepper noise.
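The noise models above can be sampled with Python's standard `random` module. The following is only a minimal sketch, not the code used to produce Figure 1: the function names, the parameter values, the flat 64x64 test image, and the choice of a = 0 (pepper) and b = 255 (salt) for the impulse levels are all assumptions made for illustration.

```python
import math
import random

def gaussian(rng, mu=0.0, sigma=20.0):
    return rng.gauss(mu, sigma)

def rayleigh(rng, a=0.0, b=800.0):
    # Inverse-CDF sampling, using F(z) = 1 - exp(-(z - a)^2 / b) for z >= a
    return a + math.sqrt(-b * math.log(1.0 - rng.random()))

def erlang(rng, a=0.1, b=2):
    # The sum of b independent exponential variables of rate a is Erlang(a, b)
    return sum(rng.expovariate(a) for _ in range(b))

def exponential(rng, a=0.05):
    return rng.expovariate(a)

def uniform(rng, a=-30.0, b=30.0):
    return rng.uniform(a, b)

def clip(value, lo=0.0, hi=255.0):
    return min(hi, max(lo, value))

def add_noise(image, sampler, rng):
    """Add one independent noise sample to every pixel, clipped to [0, 255]."""
    return [[clip(v + sampler(rng)) for v in row] for row in image]

def add_salt_and_pepper(image, rng, p_pepper=0.05, p_salt=0.05):
    """Set a pixel to 0 (pepper) with probability p_pepper, to 255 (salt) with p_salt."""
    out = []
    for row in image:
        new_row = []
        for v in row:
            u = rng.random()
            if u < p_pepper:
                new_row.append(0.0)
            elif u < p_pepper + p_salt:
                new_row.append(255.0)
            else:
                new_row.append(v)
        out.append(new_row)
    return out

rng = random.Random(0)
image = [[128.0] * 64 for _ in range(64)]  # hypothetical flat gray test image
samplers = [("gaussian", gaussian), ("rayleigh", rayleigh), ("gamma", erlang),
            ("exponential", exponential), ("uniform", uniform)]
noisy = {name: add_noise(image, sampler, rng) for name, sampler in samplers}
noisy["salt&pepper"] = add_salt_and_pepper(image, rng)
```

Plain nested lists keep the sketch free of dependencies. All models except the impulse model are treated as additive here, while salt-and-pepper noise replaces pixel values outright.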

The original grayscale histogram of the image is shown in Figure 2. After noise is added to the image, the histogram is transformed as shown in Figure 3.

Figure 3. Histograms of grayscale values of the generated noisy images: (clockwise from upper left) Gaussian, gamma, exponential, Rayleigh, uniform, and salt-and-pepper noise.
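For additive noise that is independent of the image, the histogram of the noisy image is roughly the original histogram smeared out by the noise PDF. The sketch below illustrates this on a hypothetical flat image, where a single histogram spike spreads into a band after uniform noise is added. The helper `gray_histogram` and the noise range are assumptions for illustration only.

```python
import random

def gray_histogram(image, levels=256):
    """Count how many pixels fall on each integer gray level in [0, levels - 1]."""
    counts = [0] * levels
    for row in image:
        for value in row:
            level = min(levels - 1, max(0, int(round(value))))
            counts[level] += 1
    return counts

rng = random.Random(1)
# Hypothetical flat test image: every pixel at gray level 128
flat = [[128.0] * 64 for _ in range(64)]
# Additive uniform noise on [-30, 30]
noisy = [[v + rng.uniform(-30.0, 30.0) for v in row] for row in flat]

hist_flat = gray_histogram(flat)
hist_noisy = gray_histogram(noisy)
occupied = [level for level, n in enumerate(hist_noisy) if n > 0]

print("occupied bins before noise:", sum(1 for n in hist_flat if n > 0))
print("occupied range after noise:", min(occupied), "to", max(occupied))
```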

Basic noise-reduction filters

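These filters take a window Sxy centered at (x, y) in the image and compute an average over it. Four of them are used in this activity: the arithmetic mean filter (AMF), the geometric mean filter (GMF), the harmonic mean filter (HMF), and the contraharmonic mean filter (CMF).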

Arithmetic mean filter (AMF)

The contraharmonic mean filter (CMF) is good at removing salt or pepper noise, but not both at once. If Q > 0, it removes pepper noise. If Q < 0, it removes salt noise.
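The four mean filters can be sketched in a few lines of plain Python. The formulas in the comments are the standard textbook definitions and are an assumption here, since they are not quoted from the activity manual. The function names, the edge-replication border handling, and the small `eps` guard against zero-valued pixels are also illustrative choices, not the implementation used for the figures.

```python
import math

def window(image, y, x, k):
    """Pixels of the k x k window S_xy centered at (x, y), replicating edge pixels."""
    h, w = len(image), len(image[0])
    r = k // 2
    return [image[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
            for dy in range(-r, r + 1) for dx in range(-r, r + 1)]

def amf(vals):
    # Arithmetic mean: (1 / mn) * sum(g)
    return sum(vals) / len(vals)

def gmf(vals, eps=1e-6):
    # Geometric mean: (prod g)^(1 / mn), computed through logs; eps guards log(0)
    return math.exp(sum(math.log(max(v, eps)) for v in vals) / len(vals))

def hmf(vals, eps=1e-6):
    # Harmonic mean: mn / sum(1 / g); eps guards division by zero
    return len(vals) / sum(1.0 / max(v, eps) for v in vals)

def cmf(vals, q=1.0, eps=1e-6):
    # Contraharmonic mean of order Q: sum(g^(Q+1)) / sum(g^Q)
    num = sum(max(v, eps) ** (q + 1) for v in vals)
    den = sum(max(v, eps) ** q for v in vals)
    return num / den

def apply_filter(image, stat, k=5, **kwargs):
    """Replace every pixel by stat() evaluated over its k x k neighborhood."""
    return [[stat(window(image, y, x, k), **kwargs) for x in range(len(image[0]))]
            for y in range(len(image))]

# Hypothetical 5x5 test images: flat gray with one pepper or one salt pixel
pepper = [[100.0] * 5 for _ in range(5)]
pepper[2][2] = 0.0
salt = [[100.0] * 5 for _ in range(5)]
salt[2][2] = 255.0

restored_pepper = apply_filter(pepper, cmf, k=3, q=1.0)
restored_salt = apply_filter(salt, cmf, k=3, q=-1.0)
averaged_salt = apply_filter(salt, amf, k=3)
```

With Q = 0 the CMF reduces to the arithmetic mean, and with Q = -1 to the harmonic mean, so `cmf` alone covers three of the four filters.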

Restoration

We tested the effect of the window size on the quality of the reconstructed image. The figure below shows the reconstructed images using the four filters for window sizes Sxy = {3x3, 5x5, 9x9}. A smaller window results in a grainier restoration than a larger one, while a larger window results in a blurrier image. In the following reconstructions, we used a window size of 5x5.
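The trade-off between graininess and blur can be checked numerically with the arithmetic mean filter. This is a rough sketch under assumed conditions, not a reproduction of Figure 4: a hypothetical 48x48 step-edge image, Gaussian noise with sigma = 20, and two ad hoc measures (RMS error in a flat region for graininess, number of in-between pixels across the edge for blur).

```python
import math
import random

def mean_filter(image, k):
    """Arithmetic mean over a k x k window; borders handled by edge replication."""
    h, w = len(image), len(image[0])
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in range(-r, r + 1):
                yy = min(max(y + dy, 0), h - 1)
                for dx in range(-r, r + 1):
                    xx = min(max(x + dx, 0), w - 1)
                    total += image[yy][xx]
            out[y][x] = total / (k * k)
    return out

rng = random.Random(0)
h = w = 48
# Left half dark (50), right half bright (200), plus Gaussian noise (sigma = 20)
clean = [[50.0 if x < w // 2 else 200.0 for x in range(w)] for _ in range(h)]
noisy = [[v + rng.gauss(0.0, 20.0) for v in row] for row in clean]

results = {}
for k in (3, 5, 9):
    filtered = mean_filter(noisy, k)
    # Graininess: RMS error inside the flat dark region, away from the edge
    errors = [(filtered[y][x] - 50.0) ** 2 for y in range(4, 44) for x in range(4, 16)]
    grain = math.sqrt(sum(errors) / len(errors))
    # Blur: filter the noise-free step and count pixels strictly between the two levels
    blurred = mean_filter(clean, k)
    edge_width = sum(1 for v in blurred[h // 2] if 51.0 < v < 199.0)
    results[k] = (grain, edge_width)

for k, (grain, edge_width) in results.items():
    print(f"{k}x{k}: residual grain {grain:.2f}, edge width {edge_width} px")
```

For this step image the edge width comes out as 2, 4, and 8 pixels for the 3x3, 5x5, and 9x9 windows, that is, k - 1 pixels, while the residual grain shrinks as the window grows.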

Figure 4. Reconstruction of the Gaussian-noised image using (top to bottom) AMF, GMF, HMF, and CMF (Q = 1) at window sizes of (left to right) 3x3, 5x5, and 9x9.

Here we show the effects of the filters on the noised images.

Figure 5. (left) Noisy images with (top to bottom) Gaussian, gamma, exponential, Rayleigh, uniform, and salt-and-pepper noise, and (right) their reconstructions using (from the 2nd column to the right) AMF, GMF, HMF, and CMF (Q = 1).

From Fig. 5, we see that the AMF produced consistent results for all the noised images, although not necessarily the best ones. This technique can thus be used generically for noisy images. GMF also produced very good restorations for all noised images except the salt-and-pepper-noised image. HMF also gave good reconstructions except for the Gaussian and salt-and-pepper-noised images. CMF (Q = 1) produced good results except for the exponential and salt-and-pepper-noised images. The salt-and-pepper-noised image was harder to restore than the others. Moreover, CMF (Q = 1) was not able to correctly restore the salt-and-pepper-noised image, as noted earlier. We now demonstrate the effect of CMF on salt-only and pepper-only-noised images.

Figure 6. Image reconstruction using CMF with Q = 1 and Q = -1 for (top to bottom) the image with salt-and-pepper, salt-only, and pepper-only noise. CMF cannot properly reconstruct a salt-and-pepper-noised image. Salt is removed when Q is negative; pepper is removed when Q is positive.

We indeed see that CMF restores images with salt noise alone or pepper noise alone well, provided the sign of Q matches the type of noise.
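A small worked example shows why the sign of Q matters. It assumes the standard textbook form of the CMF, which is not quoted from the activity manual, with g the noisy pixel values in the window Sxy:

$$\hat{f}(x,y) = \frac{\sum_{(s,t)\in S_{xy}} g(s,t)^{Q+1}}{\sum_{(s,t)\in S_{xy}} g(s,t)^{Q}}$$

Take a hypothetical 3x3 window with eight pixels at gray level 100 and one outlier. For a pepper pixel (0) and Q = 1:

$$\hat{f} = \frac{8\cdot 100^2 + 0^2}{8\cdot 100 + 0} = 100$$

so the pepper pixel has no influence at all, whereas the arithmetic mean would give 800/9 ≈ 88.9. For a salt pixel (255) and Q = -1:

$$\hat{f} = \frac{9}{8/100 + 1/255} \approx 107.2$$

which is closer to 100 than the arithmetic mean, 1055/9 ≈ 117.2. With the wrong sign, Q = 1, the same salt window gives 145025/1055 ≈ 137.5, so the salt pixel is emphasized instead of suppressed.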

Figure 7. Test image taken from [3]

Below are reconstructions of the noised images using (clockwise from upper left) AMF, GMF, CMF, and HMF.

Figure 8. Reconstruction of a Gaussian-noised image.

Figure 9. Reconstruction of a gamma-noised image.

Figure 10. Reconstruction of an exponential-noised image.

Figure 11. Reconstruction of a Rayleigh-noised image.

Figure 12. Reconstruction of a uniform-noised image.

Figure 13. Reconstruction of a salt-and-pepper-noised image.

In this activity, I give myself a grade of 10 for finishing it well.

I thank Irene, Janno, Martin, Master, and Cherry for their help.

References:

[1] AP186 Activity 18 manual

[2] http://en.wikipedia.org/wiki/Image_noise

[3] http://bluecandy.typepad.com/.a/