The human brain can discriminate one object from another. From the stimuli it receives from the eyes (or from other sensory organs), it finds features that are unique to an object and also notes features shared by different objects, and together these allow it to recognize things. The aim of computer vision is to approximate the pattern recognition capabilities of the human brain. Various features can be found in an object or pattern: color, size, shape, area, etc. We use such features to identify an object as a member of a set whose members share the same properties (this set is called a class).

In this activity, we are tasked to classify objects into classes using their physical features. We should be able to (1) find features that effectively separate the classes and (2) create a classifier (an algorithm) that is suitable for the task.

In Task 1, we choose the useful features, gather a representative training set of objects of known class, and calculate the feature values (for example, area) of each object. For each class, every feature is then averaged over all training objects of that class.

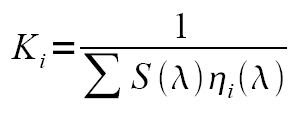

where $m_j$ is the mean feature vector of class $w_j$, taken over the feature vectors $x$ of all training objects in that class. It is a 1×n matrix, where n is the number of features. These mean values serve as the basis for classification. In our case, we used the r-g chromaticity (discussed in Activity 12) and the dimension ratio (length/width) as features for the set of classes we gathered (shown in Figure 1).
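The feature extraction and class averaging described above can be sketched as follows. This is only an illustration under stated assumptions: each object is assumed to be available as a list of RGB pixels with a measured length and width, the chromaticities are taken as r = R/(R+G+B) and g = G/(R+G+B) averaged over the object's pixels, and all function names and numbers are hypothetical rather than taken from the actual dataset.

```python
from statistics import fmean


def rg_chromaticity(pixels):
    """Return the mean (r, g) chromaticity over an object's RGB pixels.

    Assumes at least one pixel is not pure black; otherwise fmean raises.
    """
    r_values, g_values = [], []
    for red, green, blue in pixels:
        total = red + green + blue
        if total == 0:  # skip pure-black pixels to avoid dividing by zero
            continue
        r_values.append(red / total)
        g_values.append(green / total)
    return fmean(r_values), fmean(g_values)


def feature_vector(pixels, length, width):
    """Build the feature vector [r, g, dimension ratio] for one object."""
    r, g = rg_chromaticity(pixels)
    return [r, g, length / width]


def class_means(training_set):
    """Average each feature over all training vectors of each class."""
    return {
        label: [fmean(column) for column in zip(*vectors)]
        for label, vectors in training_set.items()
    }


# Hypothetical training data: two feature vectors per class.
training_set = {
    "short leaf": [[0.30, 0.45, 2.0], [0.32, 0.43, 2.2]],
    "long leaf": [[0.31, 0.44, 4.0], [0.29, 0.46, 4.4]],
}
means = class_means(training_set)
```

Each entry of `means` is one mean vector with as many elements as there are features, which is the 1×n matrix described in the text.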

Figure 1. Dataset of classes used that contains a set of short and long leaves, flowers, and 25-cent and 1-peso coins.

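Once $m_j$ has been computed for every class, we are ready to classify a test object based on its features. We extract its feature vector $x$ and determine which class it belongs to. For Task 2, we used the Euclidean (minimum) distance classifier for our test objects. Its discriminant function is given by:

$$d_j(x) = x^T m_j - \frac{1}{2} m_j^T m_j$$

This measures how close the features $x$ of an object are to the mean features of class $j$. Since $\lVert x - m_j \rVert^2 = x^T x - 2 d_j(x)$ and $x^T x$ is the same for every class, the class with the largest $d_j$ is also the class whose mean is nearest to $x$ in Euclidean distance. The object is therefore assigned to the class that yields the largest $d_j$.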
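A minimal sketch of this classification step is given below. It evaluates the discriminant for every class and picks the largest value, then checks that the same class also has the smallest squared Euclidean distance. The class means and the test vector are made-up numbers for illustration only, and the function names are hypothetical.

```python
def discriminant(x, m):
    """Evaluate d_j(x) = x.m_j - 0.5 * m_j.m_j for one class mean m."""
    dot_xm = sum(xi * mi for xi, mi in zip(x, m))
    dot_mm = sum(mi * mi for mi in m)
    return dot_xm - 0.5 * dot_mm


def squared_distance(x, m):
    """Squared Euclidean distance between x and a class mean m."""
    return sum((xi - mi) ** 2 for xi, mi in zip(x, m))


def classify(x, means):
    """Return the label with the largest discriminant, plus all scores."""
    scores = {label: discriminant(x, m) for label, m in means.items()}
    best_label = max(scores, key=scores.get)
    return best_label, scores


# Hypothetical class means [r, g, dimension ratio] and one test object.
means = {
    "coin": [0.35, 0.33, 1.0],
    "long leaf": [0.30, 0.45, 4.0],
}
x = [0.31, 0.44, 3.6]

label, scores = classify(x, means)
nearest = min(means, key=lambda name: squared_distance(x, means[name]))
```

Here `label` and `nearest` name the same class, which is the equivalence between the largest discriminant and the smallest Euclidean distance.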

Results

Below is a 3D plot of the features extracted from the objects in our dataset. The class clusters of the leaves (short and long) and of the flowers are clearly separated. One reason is that the color of the flowers is different from the color of the leaves. The difference in dimension ratio between the short and long leaves has also separated the two leaf types. However, the class clusters of the coins are not well separated. The dimension ratio cannot separate the two coin types since both are circular (dimension ratio ~ 1). Only the color, as captured by the r-g chromaticities, can separate them.

Figure 2. Separation of class clusters using r, g, and the dimension ratio as features.

We now look at the distribution of points using only the r-g chromaticities, that is, Fig. 2 viewed from above. Here, we see an overlap between the clusters of the 1-peso and 25-cent coins. In the r-g chromaticity diagram (see Fig. 3 in Activity 12), yellow and white, the colors of the 25-cent and 1-peso coins, respectively, lie close to each other. The clusters are thus expected to be close as well. The overlap itself is caused by the highly reflective surface of the coins: a highly reflective 25-cent coin (a new one) appears as though it were a 1-peso coin. In our case, one 25-cent coin is misidentified as a 1-peso coin.

Figure 3. r-g chromaticities of the samples. The clouds of data points for the 1-peso coins (inverted triangles) and the 25-cent coins (filled diamonds) overlap at a single data point, indicating that the r-g chromaticity wrongly classifies one 25-cent coin as a 1-peso coin.

From the plots, we can already observe whether the features we used are capable of separating the classes. However, this is only practical with at most 3 features; more than that is very hard to visualize. The advantage of a classifier such as the Euclidean distance is that it reduces all the features to one number per class, which makes classifying objects easier. Below is a table of test objects and their classification using the algorithm.

Table 1. Values of the Euclidean distance classifier for the test samples, computed against the training set. Sample x-i denotes sample x taken from class i, where i = {peso, 25 cents, long leaf, short leaf, flower}.

Values highlighted in yellow mark the column of the class to which each sample is assigned. For example, Sample 1-1 yielded its highest value at $d_{peso}$ and is hence classified as a 1-peso coin. All the classifications were correct except for one: a 25-cent coin wrongly classified as a 1-peso coin. With the features used, the classification is 96% accurate.
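The accuracy quoted here is the fraction of test samples whose assigned class matches their true class. A minimal sketch of that computation follows; the label lists are hypothetical and are not the entries of Table 1.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of samples whose predicted class equals the true class."""
    if not true_labels or len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


# Hypothetical example: four test objects, one coin misclassified.
true_labels = ["peso", "25 cents", "flower", "long leaf"]
predicted_labels = ["peso", "peso", "flower", "long leaf"]
score = accuracy(true_labels, predicted_labels)  # 3 of 4 correct
```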

Note that the features used are not enough to completely separate the two classes of coins. One could add the area as a feature to separate them.
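As a rough sketch of how the area could be obtained, one can count the foreground pixels of a segmented object. This assumes a binary mask of the object is already available and that all objects were imaged at the same scale, so that pixel counts are comparable; the mask below is a made-up example and the function name is hypothetical.

```python
def object_area(mask):
    """Count the foreground (non-zero) pixels in a binary mask."""
    return sum(1 for row in mask for pixel in row if pixel)


# Hypothetical 4x4 mask with a 2x2 foreground blob.
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
area = object_area(mask)
```

The resulting area would simply be appended to the feature vector as one more element.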

In this activity, I give myself a grade of 10 for doing the job well.

I thank Luis, Mark Jayson and Irene for useful discussions. Special thanks to Mark Jayson, Irene and Kaye for lending the dataset.
