Objects have an innate color reflectance. The amount and color of the light they reflect, however, does not depend on reflectance alone; it also depends on the spectrum of the light source that illuminates the object. The device used to capture the reflected light comes into play as well. Cameras are one such device: like the eye, they have their own spectral sensitivities and render the colors they detect according to those sensitivities.

Images are rendered in different modes, one of which is RGB mode, represented by the following equations.

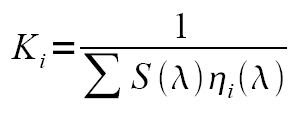

ρ(λ) and S(λ) are the reflectance and the light source spectrum, respectively, and ηi(λ) are the spectral sensitivities of the camera (the subscript i indicates the channel: red, green, or blue). The Ki are called the white balancing constants, of the form:
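As a sketch of the usual formulation (an assumption here, since the expression itself is not reproduced in the text, and the symbol $DN_i$ for the channel's digital number is introduced only for illustration), each channel value and its white balancing constant can be written as:

$$
DN_i = K_i \int \rho(\lambda)\, S(\lambda)\, \eta_i(\lambda)\, d\lambda,
\qquad
K_i = \frac{1}{\int S(\lambda)\, \eta_i(\lambda)\, d\lambda},
\qquad i \in \{R, G, B\}
$$

With this choice, a perfectly white object ($\rho(\lambda) = 1$) gives $DN_i = 1$ in every channel, which is why a mismatch between the assumed and the actual $S(\lambda)$ shows up as a color cast.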

These constants are important so that the camera renders the correct reflectance of an object. Digital cameras let the user change the white balance to match the source that currently illuminates the object, e.g. cloudy, sunny, or fluorescent. When the white balance of the camera does not match the light source, the camera renders the reflectance of the object incorrectly. In that case, we say that the camera is improperly white balanced. This is readily seen when, for example, a known white object appears non-white (often bluish). Such images have to be corrected with image processing techniques.

In this activity, we explore two white balancing algorithms used for such images: the White Patch algorithm and the Gray World algorithm.

Algorithms

The White Patch (WP) algorithm is implemented by first finding a patch of a known "white" object in the image. The average RGB values (Rw, Gw, and Bw) of the entire patch are calculated, and each of the three channels of the image is normalized by (divided by) its corresponding average. Essentially, we force the "white patch" to be white, and all other pixels are rescaled by the same factors.
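The WP steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the code used for the figures: the function name `white_patch`, the way the patch is passed in as a pair of slices, and the synthetic test image are all assumptions made for the example.

```python
import numpy as np

def white_patch(image, patch):
    """White Patch sketch: divide each channel by the mean of a known-white patch.

    image: float array of shape (H, W, 3) with values in [0, 1]
    patch: (row_slice, col_slice) marking the white region (hypothetical convention)
    """
    rows, cols = patch
    # Average R, G, B over the patch (Rw, Gw, Bw in the text)
    white = image[rows, cols].reshape(-1, 3).mean(axis=0)
    # Normalize every pixel, channel by channel
    balanced = image / white
    # Values above 1 cannot be displayed, so clip them
    return np.clip(balanced, 0.0, 1.0)

# Synthetic scene: a white background seen under a bluish cast,
# plus a gray object that is half as bright as the background
image = np.ones((4, 4, 3)) * np.array([0.6, 0.7, 0.9])
image[2:, 2:] = np.array([0.3, 0.35, 0.45])

result = white_patch(image, (slice(0, 2), slice(0, 2)))
print(result[0, 0])  # background pixel after balancing
print(result[3, 3])  # gray object after balancing
```

In this synthetic case the background becomes white and the object becomes a neutral gray, because both were affected by the same per-channel cast.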

The Gray World (GW) algorithm, on the other hand, has the same implementation as the WP algorithm except that the image is normalized by the average RGB values of the entire image. Given an image with a sufficient amount of color variation [1], the algorithm assumes that the average color of the world is gray, a member of the white family. In this case, we force the image to have a common average gray value. As a result, the effect of the illuminant on the image is removed, and the image becomes white balanced.

Below are images of a scene under a constant lighting environment, captured by a Motorola W156 camera phone with different white balancing (WB) settings. Both algorithms were applied to each image.

Figure 1. (Left column) Images containing different colors under different WB settings of the camera, and the white-balanced images using the (middle) WP and (right) GW algorithms.
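The GW normalization described above can be sketched the same way. Again this is only an illustration under stated assumptions: the name `gray_world`, the `clip` flag, and the random test scene are hypothetical and not taken from the original activity.

```python
import numpy as np

def gray_world(image, clip=True):
    """Gray World sketch: divide each channel by its mean over the whole image.

    image: float array of shape (H, W, 3) with values in [0, 1]
    clip:  if True, limit the output to [0, 1] (hypothetical convenience flag)
    """
    # Average R, G, B over every pixel in the image
    mean_rgb = image.reshape(-1, 3).mean(axis=0)
    balanced = image / mean_rgb
    return np.clip(balanced, 0.0, 1.0) if clip else balanced

# Synthetic colorful scene seen through a bluish cast
rng = np.random.default_rng(0)
scene = rng.uniform(0.0, 1.0, size=(8, 8, 3))
cast = np.array([0.6, 0.7, 0.9])
image = scene * cast

unclipped = gray_world(image, clip=False)
# Before clipping, all three channel averages are equal: the image average is gray
channel_means = unclipped.reshape(-1, 3).mean(axis=0)
print(channel_means)
```

Normalizing by the plain channel means follows the description in the text; it sets each channel average to 1 before clipping, so the clipped output will contain saturated pixels.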

Comparing the raw images with the reconstructed images from either algorithm, we see that we have successfully implemented the techniques. The background (which is actually white) does not appear white in the raw images. For example, the background of the image taken with the indoor WB camera setting appears blue, but it appears white after white balancing.

We also observe that the two algorithms produce different results, which is expected since they have different implementations. The images produced using the GW algorithm are much brighter than those from the WP algorithm. From this we can infer that, after the GW implementation, many pixel values saturated; that is, they exceeded the maximum allowed value of 1. This is not what we want. Here we see the disadvantages of the GW algorithm. One is that the image must satisfy a condition (it must contain a sufficient amount of color variation) for the GW algorithm to work. Another is that, since the algorithm normalizes the image by its average RGB values, every pixel brighter than the average in a channel is pushed above 1, so saturation is expected.
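The saturation argument can be checked on a toy image. The sketch below is an assumption-laden illustration (the helper `saturated_fraction` and the pixel values are made up for the example): it counts how many channel values exceed 1 after normalizing by the white-patch average versus the whole-image average.

```python
import numpy as np

def saturated_fraction(image, reference_rgb, tol=1e-9):
    """Fraction of channel values that exceed 1 after dividing by reference_rgb."""
    normalized = image / reference_rgb
    return float(np.mean(normalized > 1.0 + tol))

# Toy scene under a color cast: top half is the white background,
# bottom half is an object half as bright
image = np.empty((4, 4, 3))
image[:2] = np.array([0.5, 0.75, 1.0])
image[2:] = np.array([0.25, 0.375, 0.5])

# White Patch reference: average over the known-white region (top half)
white_ref = image[:2].reshape(-1, 3).mean(axis=0)
# Gray World reference: average over the entire image
gray_ref = image.reshape(-1, 3).mean(axis=0)

wp_saturated = saturated_fraction(image, white_ref)
gw_saturated = saturated_fraction(image, gray_ref)
print(wp_saturated, gw_saturated)
```

Because the image average is always darker than the white background, dividing by it pushes the background above 1, while dividing by the white patch maps the background to exactly 1.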

In this part, we apply the two algorithms to monochromatic images.

Figure 2. Monochromatic images captured using different WB camera settings and the reconstructed images using the two algorithms.

From the figure above, we see that the reconstruction of the images using the WP algorithm is much better than with the GW algorithm. Again, we go back to the first condition an image must satisfy for GW to work. Since the images are monochromatic, that is, they do not contain a variety of colors, GW is not appropriate to use.
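A small numerical sketch shows why GW breaks down here. It assumes an idealized monochromatic image (every pixel is the same green hue at a different brightness); the values are invented for illustration and are not the images in Figure 2.

```python
import numpy as np

# Idealized monochromatic image: one green hue at 16 brightness levels
hue = np.array([0.25, 0.75, 0.25])
brightness = np.linspace(0.2, 1.0, 16).reshape(4, 4, 1)
image = brightness * hue

# Gray World normalization: divide each channel by its whole-image average
mean_rgb = image.reshape(-1, 3).mean(axis=0)
balanced = image / mean_rgb

# After normalization R, G, and B are equal at every pixel,
# so the green object has been turned into shades of gray
channel_spread = np.ptp(balanced, axis=2).max()
print(channel_spread)
```

The image average carries the object's own color rather than the illuminant's, so GW removes the color of the object itself.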

Strengths and Weaknesses of Each Algorithm

From the results obtained, we can gauge the strengths and weaknesses of each algorithm. The GW algorithm does not need a white patch to reconstruct the image; hence, it is useful when nothing is known about the image.

Based on the results, we can infer that the WP algorithm works whether the images are monochromatic or colorful, so it can be used generically for white balancing images. However, it only works if one knows of a "white" object in the image.

In general, the GW algorithm is more convenient to use than WP. It is easier to implement since we do not need to find white patches in the image, and it is very handy when dealing with colorful images.

I give myself a grade of 9/10 for this activity since I was able to explain the strengths and limitations of the algorithms.

I thank Orly, Thirdy and Master for useful discussions.

References:

[1] http://scien.stanford.edu/class/psych221/projects/00/trek/GWimages.html

You deserve a 10 for your analysis.
